code_aster cluster MPI execution

On cloudHPC you can run code_aster on a cluster with OpenMPI. The work is divided among the cores available on the instance, so your simulation can finish faster.

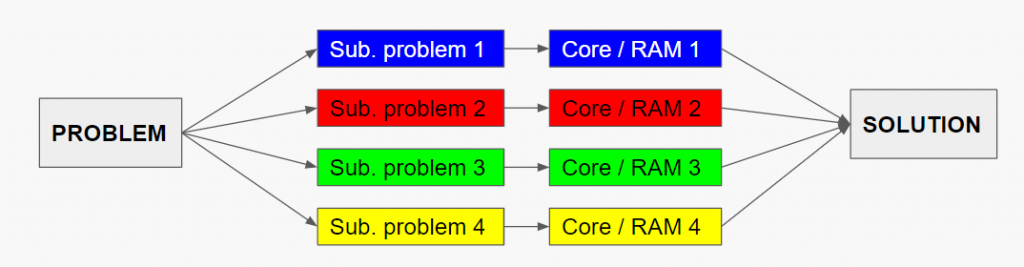

When running MPI processes it is mandatory to “split” the mesh: parallelization works by dividing the simulation into sub-simulations, each of which is assigned to a specific core to be solved, as sketched in the image.
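
To make the idea of splitting concrete, here is a toy sketch in Python. It only slices a list of element ids into contiguous chunks, one per core; it is not how code_aster partitions a mesh (real partitioners also balance the load and keep the interfaces between sub-domains small). The function name `split_elements` is hypothetical and used for illustration only.

```python
# Toy illustration only: real mesh partitioners are far more sophisticated.
# `split_elements` is a hypothetical helper, not part of code_aster.

def split_elements(element_ids, core_number):
    """Split a list of element ids into core_number contiguous chunks."""
    if core_number < 1:
        raise ValueError("core_number must be at least 1")
    base, extra = divmod(len(element_ids), core_number)
    chunks, start = [], 0
    for rank in range(core_number):
        # The first `extra` cores receive one additional element.
        size = base + (1 if rank < extra else 0)
        chunks.append(element_ids[start:start + size])
        start += size
    return chunks


sub_domains = split_elements(list(range(10)), 4)
for rank, chunk in enumerate(sub_domains):
    print(f"core {rank}: elements {chunk}")
```

Each core then solves only its own chunk, which is why the number of sub-domains is normally tied to the number of cores.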

With this in mind, you need to define some basic information in your input files so the system can handle a parallel code_aster run. The default configuration does not allow your analysis to run on a code_aster cluster. Let’s have a look at the required settings.

.export file

You do not need to change anything in the export file: cloudHPC takes care of it.

.comm file

The comm file lists the sequence of operations code_aster performs. You have to update two parts of this file. The first is AFFE_MODELE, the command that assigns the model to the mesh. Here you have to specify the DISTRIBUTION option with the core_number to use. The resulting command looks like this:

    model = AFFE_MODELE(AFFE=( options ), MAILLAGE=mesh,
                        DISTRIBUTION=_F(METHODE='SOUS_DOMAINE',
                                        NB_SOUS_DOMAINE=core_number,))
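
If you prepare the same case for several instance sizes, a small script can write this command for you. The sketch below is plain Python that only produces text; `render_affe_modele` is a hypothetical helper, and the `AFFE` line assumes a 3D mechanical model, so replace it with the options of your own analysis.

```python
# Hypothetical helper: it only builds the text of the AFFE_MODELE command.
# The AFFE line assumes a 3D mechanical model; adapt it to your case.
AFFE_MODELE_TEMPLATE = """model = AFFE_MODELE(
    AFFE=_F(TOUT='OUI', PHENOMENE='MECANIQUE', MODELISATION='3D'),
    MAILLAGE={mesh},
    DISTRIBUTION=_F(METHODE='SOUS_DOMAINE',
                    NB_SOUS_DOMAINE={cores}),
)"""


def render_affe_modele(core_number, mesh="mesh"):
    """Return the AFFE_MODELE command text for the given number of cores."""
    if core_number < 1:
        raise ValueError("core_number must be at least 1")
    return AFFE_MODELE_TEMPLATE.format(mesh=mesh, cores=core_number)


print(render_affe_modele(8))
```

Paste the printed text into your comm file in place of the existing AFFE_MODELE command.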

The solver you are going to use also needs an update. Assuming you use the STAT_NON_LINE command, it’s through the SOLVEUR keyword that you select one of the two parallel solvers: MUMPS or PETSC.

    # MUMPS solver
    stat_non_line = STAT_NON_LINE(other_data,
        SOLVEUR=_F(METHODE='MUMPS', MATR_DISTRIBUEE='OUI'),
    )

    # PETSC solver
    stat_non_line = STAT_NON_LINE(other_data,
        SOLVEUR=_F(METHODE='PETSC', PRE_COND='LDLT_INC',
                   ALGORITHME='CG', RESI_RELA=1.E-08),
    )

To make these changes you can use our online tool for editing text files.

Execution on cloudHPC code_aster cluster

Once you have set up the two files mentioned above, you are ready to run code_aster on the cluster. At the moment, the fully MPI version of code_aster is 14.6. Upload every file to the storage and execute the solver named codeAster-14.6. It automatically detects the configuration of your analysis and launches a fully parallel simulation.
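
Before uploading, it can help to check locally that the comm file contains both edits. The following is a minimal sketch, not a cloudHPC tool: `check_comm_text` is a hypothetical function that searches the text with regular expressions, so it can be fooled by commented-out lines or unusual formatting.

```python
import re

# Hypothetical pre-upload check; it only pattern-matches the text of the
# comm file and does not parse or run it.


def check_comm_text(comm_text):
    """Return a list of problems; an empty list means both edits were found."""
    problems = []
    if not re.search(r"DISTRIBUTION\s*=\s*_F\s*\(", comm_text):
        problems.append("AFFE_MODELE has no DISTRIBUTION keyword")
    elif not re.search(r"NB_SOUS_DOMAINE\s*=", comm_text):
        problems.append("DISTRIBUTION does not set NB_SOUS_DOMAINE")
    if not re.search(r"METHODE\s*=\s*['\"](MUMPS|PETSC)['\"]", comm_text):
        problems.append("SOLVEUR does not select MUMPS or PETSC")
    return problems


sample = """
model = AFFE_MODELE(MAILLAGE=mesh,
    DISTRIBUTION=_F(METHODE='SOUS_DOMAINE', NB_SOUS_DOMAINE=8))
result = STAT_NON_LINE(SOLVEUR=_F(METHODE='MUMPS', MATR_DISTRIBUEE='OUI'))
"""
print(check_comm_text(sample) or "comm file looks ready for MPI")
```

To check a real file, read its contents into a string and pass that string to the function.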

CloudHPC is an HPC provider for running engineering simulations in the cloud. CloudHPC provides from 1 to 224 vCPUs for each process in several HPC infrastructure configurations, both multi-thread and multi-core. The current software range includes several CAE, CFD, FEA and FEM packages, among them OpenFOAM, FDS, Blender and several others.

New users benefit from a FREE trial of 300 vCPU/Hours to test the platform and all its features and to verify whether it suits their needs.
