Decoding Performance: A Scalability Showdown Between CalculiX and Code_Aster

In the demanding world of Finite Element Analysis (FEA), performance is king. When tackling complex engineering problems, especially with large datasets and intricate geometries, how efficiently your software scales with increasing computational resources can make or break your analysis time. This is precisely what we set out to investigate in a deep dive into the scalability of two prominent open-source FEA solvers, CalculiX and Code_Aster.

Our journey was driven by a common pain point for many users of open-source software: the sheer number of configuration options. With variables like geometry, mesh, loads, and conditions, it's easy to get lost in the labyrinth of possibilities. To bring clarity, we performed a rigorous study, testing over 300 variations of the same FEA problem to establish practical guidelines for users, even though these guidelines are highly specific to our benchmark.

Understanding Scalability and Speedup

Before we dive into the results, let’s quickly define our key metrics:

- Scalability: Simply put, scalability is about how much the solution time decreases as we increase the number of hardware resources (like CPU cores) used.

- SpeedUp (S): This measures how much faster a problem can be solved when increasing the number of CPUs. The formula is $S = T_1 / T_N$, where $T_1$ is the time taken with one CPU and $T_N$ is the time taken with $N$ CPUs.

- Efficiency (E): This tells us how effectively the speedup is achieved relative to the increase in CPUs. The formula is $E = S / N = T_1 / (N \cdot T_N)$.
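As a minimal sketch of how these two metrics are computed from a set of timed runs, the helper below takes a mapping from CPU count to wall-clock time and returns speedup and efficiency for each entry. The function name and the example timings are hypothetical, chosen for illustration; they are not data from our benchmark.

```python
def speedup_and_efficiency(timings):
    """Return {N: (S, E)} from a mapping of CPU count N -> wall time T_N.

    A one-CPU baseline (N = 1) is required, matching S = T1 / TN and E = S / N.
    """
    if 1 not in timings:
        raise ValueError("a one-CPU baseline time T1 is required")
    t1 = timings[1]
    results = {}
    for n, tn in sorted(timings.items()):
        s = t1 / tn  # speedup S = T1 / TN
        e = s / n    # efficiency E = S / N = T1 / (N * TN)
        results[n] = (s, e)
    return results


# Hypothetical wall-clock times in seconds (illustrative only, not measured data)
example = {1: 1200.0, 2: 640.0, 4: 360.0, 8: 240.0}
for n, (s, e) in speedup_and_efficiency(example).items():
    print(f"N={n:2d}  S={s:5.2f}  E={e:6.1%}")
```

In this made-up example the speedup keeps rising up to 8 CPUs (S = 5), while efficiency falls to 62.5%, which is exactly the pattern of diminishing returns described next.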

As we increase the number of CPUs, speedup generally increases until a certain limit is hit. Pushing beyond this limit can lead to diminishing returns, meaning low efficiency, no speedup at all, or even a reduction in performance. This limit, however, is not fixed and depends on several factors, including the software version, hardware used, and the nature of the simulation (e.g., the models involved and the mesh density).
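One way to locate that limit in practice is to pick an efficiency threshold and find the largest CPU count that still meets it, while also flagging any point where adding CPUs made the run slower. The sketch below assumes timings keyed by CPU count with a one-CPU baseline; the 0.7 threshold and the helper names are arbitrary, hypothetical choices, not a criterion used in our study.

```python
def scaling_limit(timings, min_efficiency=0.7):
    """Return the largest CPU count whose efficiency is >= min_efficiency.

    timings maps CPU count N -> wall time T_N and must include N = 1.
    The 0.7 default is an illustrative threshold, not a standard value.
    """
    t1 = timings[1]
    best = 1
    for n in sorted(timings):
        efficiency = t1 / (n * timings[n])  # E = T1 / (N * TN)
        if efficiency >= min_efficiency:
            best = n
    return best


def slowdowns(timings):
    """List CPU counts that ran slower than the next-smaller CPU count tested."""
    counts = sorted(timings)
    return [b for a, b in zip(counts, counts[1:]) if timings[b] > timings[a]]


# Hypothetical timings in seconds: 16 CPUs is slower than 8 (a performance reduction)
measured = {1: 1200.0, 2: 640.0, 4: 360.0, 8: 240.0, 16: 260.0}
print(scaling_limit(measured), slowdowns(measured))  # 4 [16]
```

Because efficiency is not guaranteed to decrease monotonically, the loop checks every measured point rather than stopping at the first one below the threshold.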

Our Testbed: Hardware and Software

To conduct our experiments, we utilized a Cloud HPC environment, specifically leveraging:

- CPUs: AMD EPYC 7B13 @ 2.8GHz and Intel(R) Xeon(R) PLATINUM 8581C CPU @ 2.9GHz. We explored configurations with varying core counts and threads per core, labeled XX-YY, where XX is the number of cores and YY is the number of threads per core.

- RAM: 8 GB per vCPU allocated.
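To make the XX-YY labels concrete, the sketch below parses a label and derives the resources it implies. It assumes that the total vCPU count is cores multiplied by threads per core, and applies the 8 GB per vCPU figure above; the class and function names are hypothetical helpers, not part of any CloudHPC tooling.

```python
from dataclasses import dataclass

RAM_PER_VCPU_GB = 8  # from the testbed description: 8 GB per vCPU


@dataclass(frozen=True)
class CpuConfig:
    cores: int
    threads_per_core: int

    @property
    def vcpus(self) -> int:
        # Assumption: total vCPUs = cores x threads per core
        return self.cores * self.threads_per_core

    @property
    def ram_gb(self) -> int:
        return self.vcpus * RAM_PER_VCPU_GB


def parse_label(label: str) -> CpuConfig:
    """Parse an 'XX-YY' label (XX = cores, YY = threads per core)."""
    cores, threads = label.split("-")
    return CpuConfig(int(cores), int(threads))


cfg = parse_label("16-2")
print(cfg.vcpus, cfg.ram_gb)  # 32 256
```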

On the software front, we tested:

- Code_Aster 17.0: Utilizing a Singularity container compiled in-house.

- CalculiX: the publicly available 2.21 release, as well as in-house builds with PARDISO (v2.19) and PARDISO with MPI (v2.18).

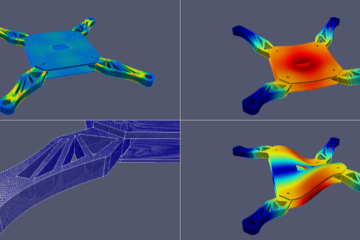

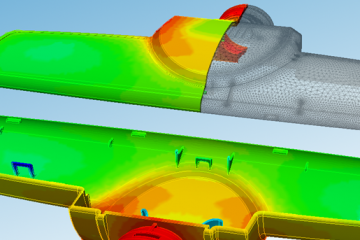

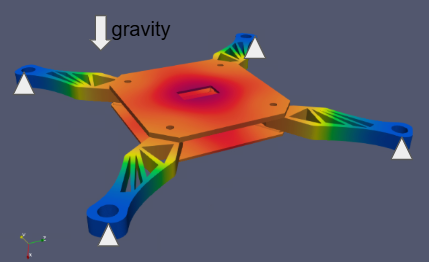

The FEM Model: A Consistent Benchmark

To ensure a fair comparison, we employed a single, well-defined FEA model:

- Analysis Type: Static linear analysis.

- Elements: 3D elements with a tetrahedral mesh.

- Loading: Constrained model with gravity as the only load.

- Post-processing: Not considered; only displacement was calculated.

- Size: 3,984,763 cells and 865,432 nodes.
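For a sense of the linear-system size behind this mesh: each node of a 3D solid element carries three translational displacement degrees of freedom (DOFs), so the node count converts directly into the number of unknowns before boundary conditions are applied. A minimal sketch of that arithmetic (the helper name is illustrative):

```python
DOF_PER_NODE = 3  # ux, uy, uz for 3D solid (tetrahedral) elements


def total_dofs(nodes: int, dof_per_node: int = DOF_PER_NODE) -> int:
    """Unconstrained degrees of freedom, before boundary conditions remove any."""
    return nodes * dof_per_node


print(f"{total_dofs(865_432):,}")  # 2,596,296
```

Roughly 2.6 million unknowns is the system that the MUMPS, PETSc, Spooles and PARDISO solvers discussed below have to factorize or iterate on.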

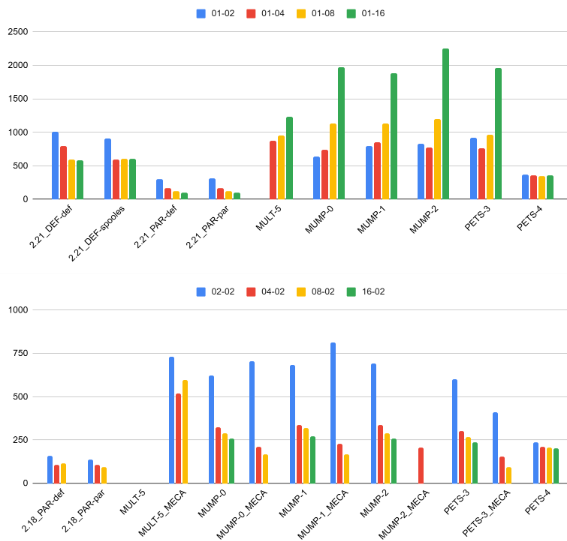

We also explored different solver configurations within both Code_Aster and CalculiX, including various MUMPS and PETSc options for Code_Aster, and default, Spooles, PARDISO MultiThread, and PARDISO MPI for CalculiX.
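The number of runs grows multiplicatively with each option, which is how a single model quickly turns into hundreds of test cases. The sketch below enumerates a sweep grid; the solver names come from the list above, but the core and thread counts are hypothetical placeholders, not the actual grid used in our study.

```python
from itertools import product

# Solver labels from the text; core/thread counts are illustrative assumptions.
SOLVERS = {
    "Code_Aster": ["MUMPS", "PETSc"],
    "CalculiX": ["default", "Spooles", "PARDISO MultiThread", "PARDISO MPI"],
}
CORES = [1, 2, 4, 8, 16]
THREADS_PER_CORE = [1, 2, 4]


def sweep():
    """Yield one (code, solver, cores, threads_per_core) tuple per run."""
    for code, solvers in SOLVERS.items():
        for solver, cores, threads in product(solvers, CORES, THREADS_PER_CORE):
            yield code, solver, cores, threads


runs = list(sweep())
print(len(runs))  # 6 solvers x 5 core counts x 3 thread counts = 90
```

Adding just one more axis, such as two CPU vendors or a few solver sub-options per code, is enough to push a grid like this past 300 runs.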

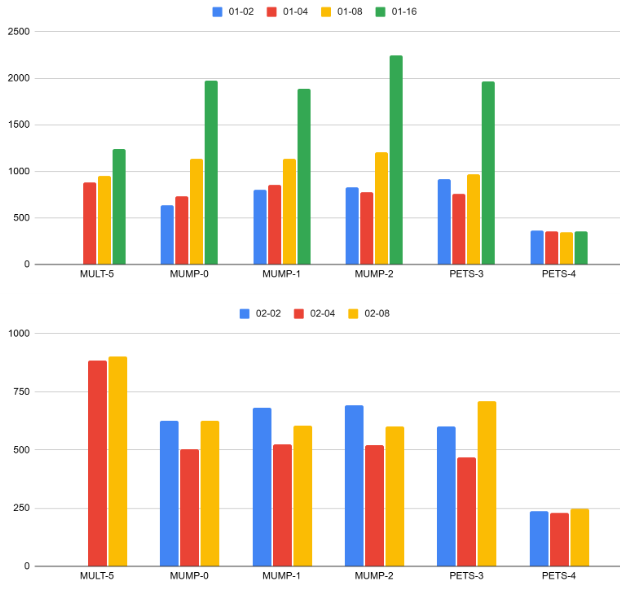

Key Findings and Observations:

Our extensive testing revealed some compelling trends:

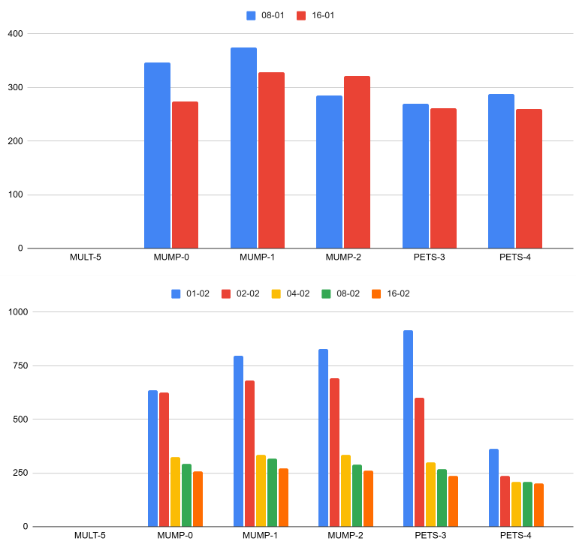

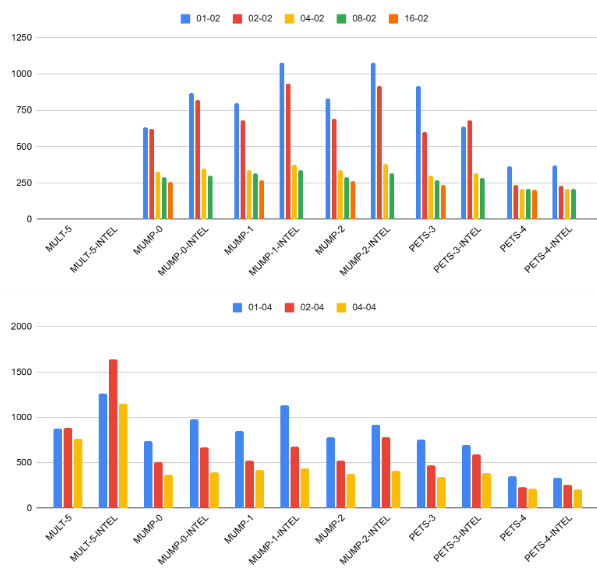

1. Code_Aster Scalability with Hyperthreading: Increasing the number of threads in Code_Aster reduced solution time, but mainly with a limited number of threads per core (up to 4 threads per core). Specific solver configurations such as PETS-4 and MULT-5 / MUMP-2 showed the most promising hyperthread scalability within this range.

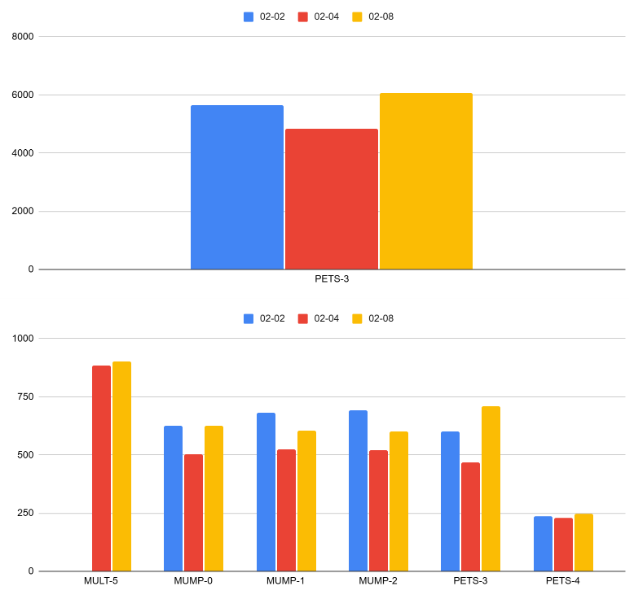

2. Code_Aster Scalability with Multi-Core: The time reduction from adding CPU cores was most significant when combined with 2 threads per core. As with hyperthreading, certain solver configurations such as PETS-4 and PETS-3 showed better multi-core scalability under these conditions.

3. Intel vs. AMD Performance: When comparing Intel and AMD processors, we found no major difference in computational speed for the tested configurations. The primary distinction lay in the LLC (Last Level Cache) allocation, which did not translate into significant performance differences in our benchmark.

4. NITER and Scalability: Testing with NITER = 10 (both hyperthread and multi-core) provided further insights into how the number of iterations affects scalability. The results generally aligned with previous observations, with specific solvers showing better scaling tendencies.

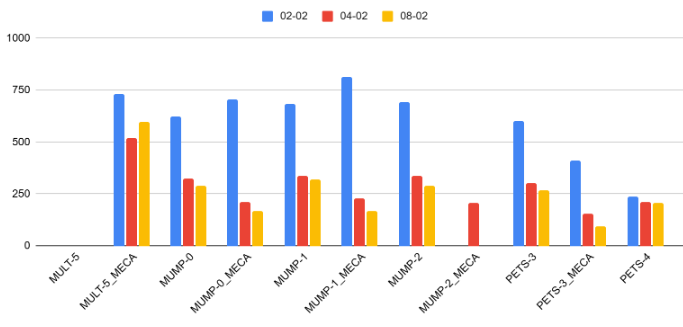

5. STAT vs. MECA Scalability: Comparing the MECA_STATIQUE and STAT_NON_LINE operators in Code_Aster, we observed that MECA_STATIQUE generally offered better scalability across various configurations.

6. CalculiX vs. Code_Aster Comparison: This was a crucial part of our study. We observed that CalculiX, particularly with its PARDISO solver (both with and without MPI), often demonstrated superior scalability compared to Code_Aster in our benchmark. CalculiX with PARDISO could effectively scale up to 200,000 nodes per core, while Code_Aster's thread scalability had a more pronounced limit (around 2-4 threads, with some solvers reaching up to 100,000 nodes per core but with significant RAM consumption).
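Nodes-per-core figures are easier to apply when translated back into core counts for a given mesh. The sketch below treats them simply as a per-core workload (nodes divided by cores) and computes the smallest core count that keeps each core at or below a given figure; it is a unit conversion under that assumption, not a performance prediction, and the helper names are hypothetical.

```python
import math

MODEL_NODES = 865_432  # node count of the benchmark mesh


def nodes_per_core(nodes: int, cores: int) -> float:
    """Average number of mesh nodes assigned to each core."""
    return nodes / cores


def cores_for_target(nodes: int, max_nodes_per_core: int) -> int:
    """Smallest core count that keeps each core at or below max_nodes_per_core."""
    return math.ceil(nodes / max_nodes_per_core)


for cores in (1, 2, 4, 8, 16):
    print(f"{cores:2d} cores -> {nodes_per_core(MODEL_NODES, cores):>10,.0f} nodes/core")

print(cores_for_target(MODEL_NODES, 200_000))  # 5
print(cores_for_target(MODEL_NODES, 100_000))  # 9
```

For our roughly 865,000-node mesh, the 200,000 and 100,000 nodes-per-core figures correspond to about 5 and 9 cores respectively.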

Conclusions and Recommendations:

Our in-depth analysis leads to these key takeaways:

For Code_Aster:

- MECA_STATIQUE is the preferred operator for better scalability.

- PETSc solvers also show good performance.

- Be mindful of thread limits: using more than 2 threads (and never more than 4) might not yield proportional speedups and can increase RAM consumption.

- Intel and AMD architectures showed comparable performance in our tests.

For CalculiX:

- The PARDISO solver (with or without MPI) is a strong contender for achieving excellent scalability.

- CalculiX demonstrates impressive scalability, handling up to 200,000 nodes per core effectively.

This study underscores the importance of understanding your software’s scalability characteristics and how they interact with your hardware and the specific problem you’re trying to solve. While our findings are based on a specific benchmark, they offer valuable insights for users looking to optimize their FEA workflows with open-source tools.

Have you had similar experiences with CalculiX or Code_Aster? Share your thoughts and findings in the comments below!

CloudHPC is an HPC provider for running engineering simulations in the cloud. CloudHPC provides from 1 to 224 vCPUs for each process in several HPC infrastructure configurations, both multi-thread and multi-core. The current software range includes several CAE, CFD, FEA and FEM packages, including OpenFOAM, FDS, Blender and several others.

New users benefit from a FREE trial of 300 vCPU hours to test the platform, explore all its features and verify whether it is suitable for their needs.