Split FDS Mesh using BlenderFDS

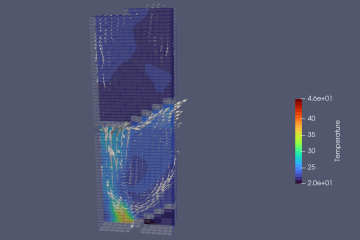

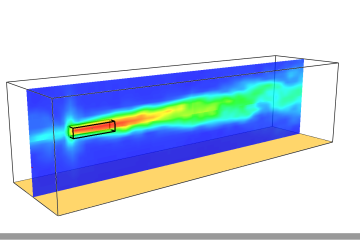

A key step toward good scalability in FDS is the MPI (also called multi-core) parallelization method. As we highlighted in an earlier post, this method relies on the MESH definitions in the FDS input file. To scale well, you need to split your mesh into smaller parts so that the cloudHPC cluster can easily distribute the job across all the cores involved in the calculation.

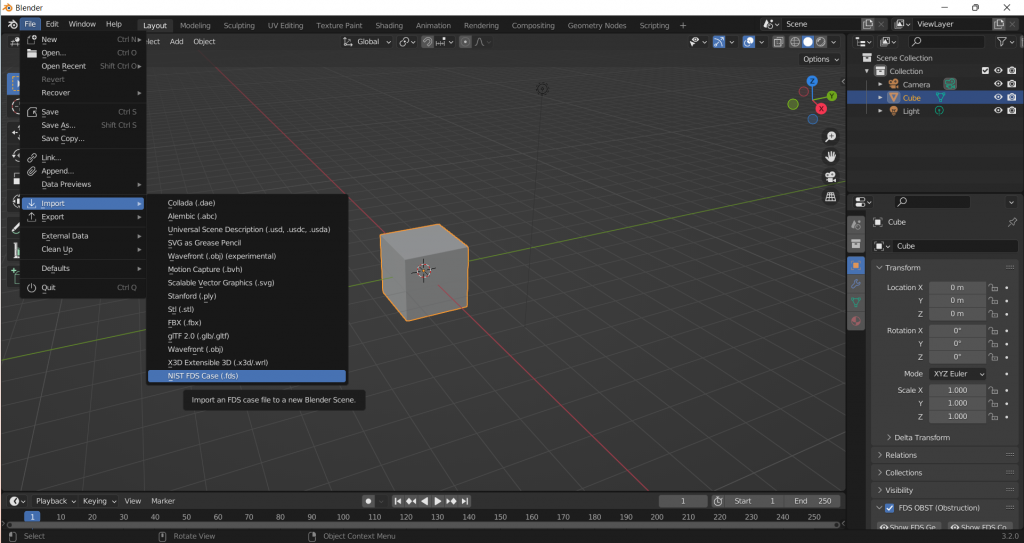

Import FDS file in BlenderFDS

BlenderFDS is free, open-source software that can help you achieve this goal. After generating the FDS file with your preferred software, import it into BlenderFDS via File->Import->NIST FDS Case.

Split FDS MESH elements

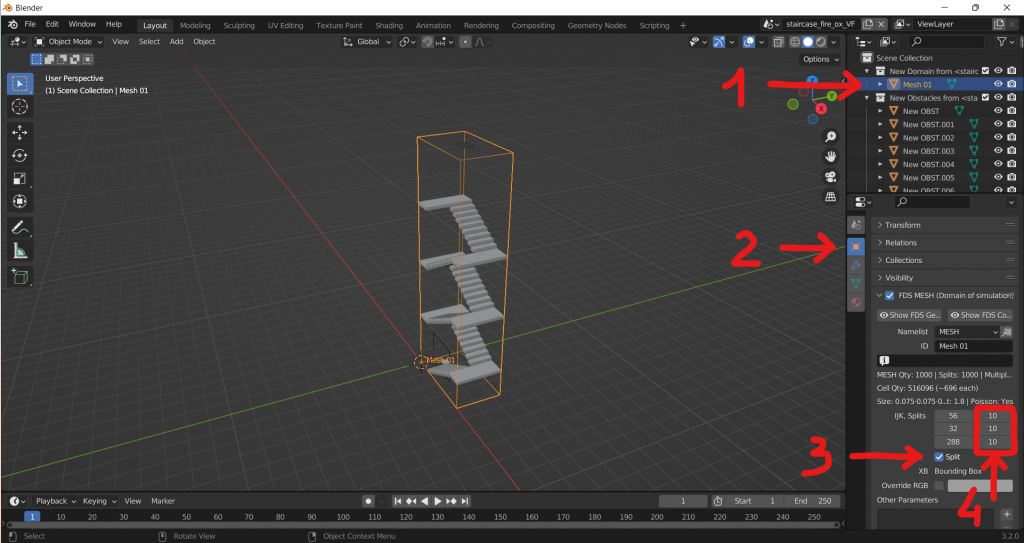

Once the file loads, the right-hand side shows a list of all the elements BlenderFDS found in the input FDS file. Among these is the list of MESH elements (highlighted as point 1 in the following image). Our example had only one MESH, but your file may already contain several. In either case, select a MESH to edit it and activate the split option (points 2 and 3). With this feature BlenderFDS splits the mesh along the X, Y and Z directions, using the number of cut planes defined in point 4.
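As a rough illustration of what such a split produces, here is a minimal Python sketch. It is not BlenderFDS's actual implementation: the functions `split_cells` and `split_mesh` are hypothetical, the starting mesh (56 x 32 x 288 cells over 4.2 x 2.4 x 12.24 m) is inferred from the exported listing at the end of this post, and the "parts per axis" argument is an assumption about how the split setting maps to sub-meshes.

```python
def split_cells(n_cells, n_parts):
    """Distribute n_cells along one axis into n_parts near-equal chunks."""
    base, extra = divmod(n_cells, n_parts)
    # The first `extra` chunks receive one additional cell.
    return [base + 1 if i < extra else base for i in range(n_parts)]


def split_mesh(ijk, xb, parts):
    """Split a mesh into sub-meshes; returns a list of (ijk, xb) tuples."""
    axes = []
    for axis in range(3):
        lo, hi = xb[2 * axis], xb[2 * axis + 1]
        size = (hi - lo) / ijk[axis]  # cell size along this axis
        pieces, start = [], lo
        for n in split_cells(ijk[axis], parts[axis]):
            end = start + n * size
            pieces.append((n, round(start, 3), round(end, 3)))
            start = end
        axes.append(pieces)
    # Combine the per-axis chunks into boxes (Z varies fastest).
    return [
        ((nx, ny, nz), (x0, x1, y0, y1, z0, z1))
        for nx, x0, x1 in axes[0]
        for ny, y0, y1 in axes[1]
        for nz, z0, z1 in axes[2]
    ]


# Assumed starting mesh, split into 3 parts along each direction.
sub_meshes = split_mesh((56, 32, 288), (0.0, 4.2, 0.0, 2.4, 0.0, 12.24), (3, 3, 3))
print(len(sub_meshes))  # 27
print(sum(i * j * k for (i, j, k), _ in sub_meshes))  # 516096
```

Splitting never changes the total cell count; it only changes how the cells are grouped into meshes.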

The right number of splits depends on your starting mesh size and total cell count. We recommend not splitting into meshes smaller than 10,000 cells.
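A quick way to check a candidate split against this recommendation is to compute the smallest resulting mesh before touching BlenderFDS. The sketch below assumes an even split along each axis; `smallest_submesh` is a hypothetical helper and the 56 x 32 x 288 starting mesh is only an example value.

```python
MIN_CELLS = 10_000  # lower bound recommended above


def smallest_submesh(ijk, parts):
    """Cell count of the smallest sub-mesh after an even split."""
    cells = 1
    for n_cells, n_parts in zip(ijk, parts):
        cells *= n_cells // n_parts  # smallest chunk along this axis
    return cells


ijk = (56, 32, 288)  # example starting mesh (assumed)
for parts in [(2, 2, 2), (3, 3, 3), (4, 4, 4)]:
    smallest = smallest_submesh(ijk, parts)
    verdict = "ok" if smallest >= MIN_CELLS else "too small"
    print(parts, smallest, verdict)
```

With these example numbers, 3 parts per axis keeps every mesh above the threshold, while 4 parts per axis does not.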

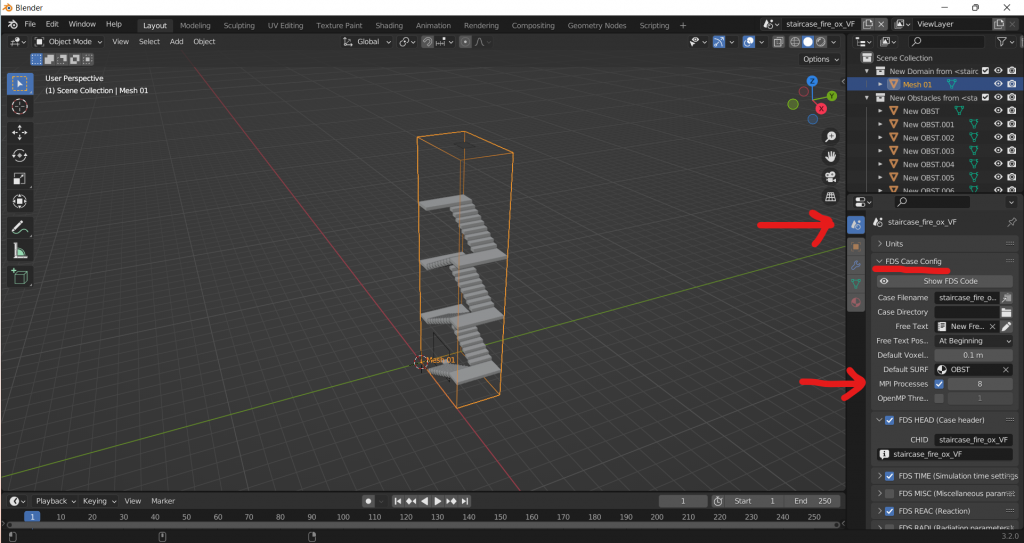

Once all your meshes are split, open the details of your simulation and set the number of MPI processes you want to use.
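To give an idea of how meshes can be distributed over a fixed number of MPI processes, here is a minimal sketch of a greedy balancing strategy: the largest remaining mesh always goes to the least-loaded process. This is an illustrative technique only, not necessarily the algorithm BlenderFDS uses, and the mesh names and cell counts are made-up example values.

```python
import heapq


def assign_meshes(cell_counts, n_processes):
    """Greedy balancing: next-largest mesh goes to the least-loaded process."""
    heap = [(0, rank) for rank in range(n_processes)]  # (load, rank)
    heapq.heapify(heap)
    assignment = {rank: [] for rank in range(n_processes)}
    # Visit meshes from largest to smallest cell count.
    for mesh_id, cells in sorted(cell_counts.items(), key=lambda kv: -kv[1]):
        load, rank = heapq.heappop(heap)
        assignment[rank].append(mesh_id)
        heapq.heappush(heap, (load + cells, rank))
    return assignment


# Hypothetical meshes and their cell counts.
meshes = {"a": 20064, "b": 20064, "c": 18240, "d": 17280, "e": 19008}
assignment = assign_meshes(meshes, 2)
for rank, ids in assignment.items():
    print(rank, ids, sum(meshes[m] for m in ids))
```

The closer the per-process cell totals are to each other, the less time the faster processes spend waiting for the slowest one.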

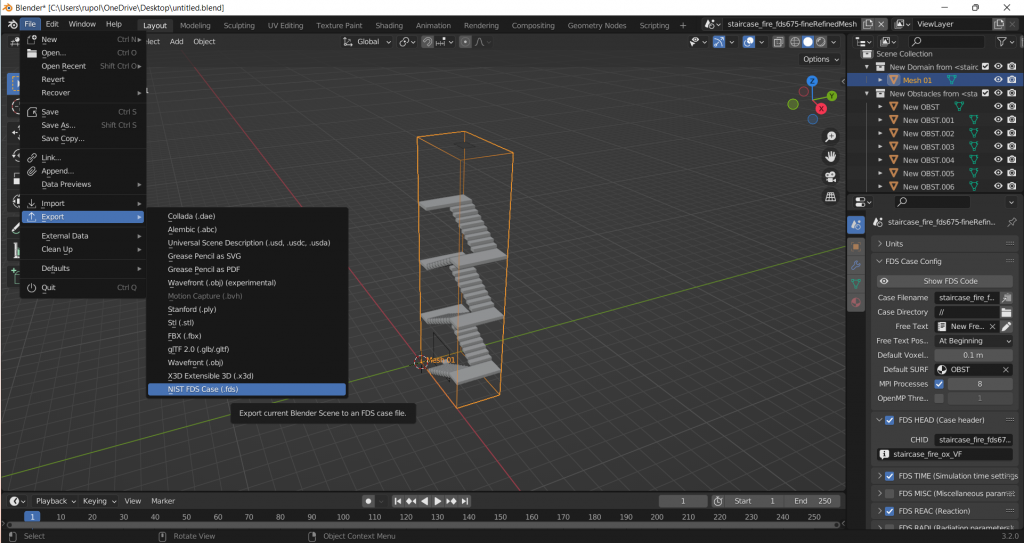

Finally, you can export your new FDS file, which replaces the original one. First save the blend file (File->Save), then export the FDS file (File->Export->NIST FDS Case).

The output FDS file

If you wonder what happened to your FDS file, you can open it in a text editor. If you check your MESH lines, you will notice that BlenderFDS has arranged them into sections, each of which is assigned to a specific MPI_PROCESS.

--- Computational domain | MPI Processes: 8 | MESH Qty: 27 | Cell Qty: 516096

-- MPI Process: <0> | MESH Qty: 3 | Cell Qty: 58368

Cell Qty: 20064 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s0' IJK=19,11,96 XB=0.000,1.425,0.000,0.825,0.000,4.080 MPI_PROCESS=0 /

Cell Qty: 20064 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s11' IJK=19,11,96 XB=1.425,2.850,0.000,0.825,8.160,12.240 MPI_PROCESS=0 /

Cell Qty: 18240 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s8' IJK=19,10,96 XB=0.000,1.425,1.650,2.400,8.160,12.240 MPI_PROCESS=0 /

-- MPI Process: <1> | MESH Qty: 3 | Cell Qty: 58368

Cell Qty: 20064 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s1' IJK=19,11,96 XB=0.000,1.425,0.000,0.825,4.080,8.160 MPI_PROCESS=1 /

Cell Qty: 20064 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s12' IJK=19,11,96 XB=1.425,2.850,0.825,1.650,0.000,4.080 MPI_PROCESS=1 /

Cell Qty: 18240 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s15' IJK=19,10,96 XB=1.425,2.850,1.650,2.400,0.000,4.080 MPI_PROCESS=1 /

-- MPI Process: <2> | MESH Qty: 3 | Cell Qty: 58368

Cell Qty: 20064 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s2' IJK=19,11,96 XB=0.000,1.425,0.000,0.825,8.160,12.240 MPI_PROCESS=2 /

Cell Qty: 20064 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s13' IJK=19,11,96 XB=1.425,2.850,0.825,1.650,4.080,8.160 MPI_PROCESS=2 /

Cell Qty: 18240 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s16' IJK=19,10,96 XB=1.425,2.850,1.650,2.400,4.080,8.160 MPI_PROCESS=2 /

-- MPI Process: <3> | MESH Qty: 3 | Cell Qty: 58368

Cell Qty: 20064 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s3' IJK=19,11,96 XB=0.000,1.425,0.825,1.650,0.000,4.080 MPI_PROCESS=3 /

Cell Qty: 20064 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s14' IJK=19,11,96 XB=1.425,2.850,0.825,1.650,8.160,12.240 MPI_PROCESS=3 /

Cell Qty: 18240 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s17' IJK=19,10,96 XB=1.425,2.850,1.650,2.400,8.160,12.240 MPI_PROCESS=3 /

-- MPI Process: <4> | MESH Qty: 4 | Cell Qty: 75360

Cell Qty: 20064 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s4' IJK=19,11,96 XB=0.000,1.425,0.825,1.650,4.080,8.160 MPI_PROCESS=4 /

Cell Qty: 19008 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s18' IJK=18,11,96 XB=2.850,4.200,0.000,0.825,0.000,4.080 MPI_PROCESS=4 /

Cell Qty: 19008 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s22' IJK=18,11,96 XB=2.850,4.200,0.825,1.650,4.080,8.160 MPI_PROCESS=4 /

Cell Qty: 17280 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s26' IJK=18,10,96 XB=2.850,4.200,1.650,2.400,8.160,12.240 MPI_PROCESS=4 /

-- MPI Process: <5> | MESH Qty: 3 | Cell Qty: 58080

Cell Qty: 20064 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s5' IJK=19,11,96 XB=0.000,1.425,0.825,1.650,8.160,12.240 MPI_PROCESS=5 /

Cell Qty: 19008 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s19' IJK=18,11,96 XB=2.850,4.200,0.000,0.825,4.080,8.160 MPI_PROCESS=5 /

Cell Qty: 19008 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s23' IJK=18,11,96 XB=2.850,4.200,0.825,1.650,8.160,12.240 MPI_PROCESS=5 /

-- MPI Process: <6> | MESH Qty: 4 | Cell Qty: 74592

Cell Qty: 20064 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s9' IJK=19,11,96 XB=1.425,2.850,0.000,0.825,0.000,4.080 MPI_PROCESS=6 /

Cell Qty: 19008 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s20' IJK=18,11,96 XB=2.850,4.200,0.000,0.825,8.160,12.240 MPI_PROCESS=6 /

Cell Qty: 18240 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s6' IJK=19,10,96 XB=0.000,1.425,1.650,2.400,0.000,4.080 MPI_PROCESS=6 /

Cell Qty: 17280 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s24' IJK=18,10,96 XB=2.850,4.200,1.650,2.400,0.000,4.080 MPI_PROCESS=6 /

-- MPI Process: <7> | MESH Qty: 4 | Cell Qty: 74592

Cell Qty: 20064 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s10' IJK=19,11,96 XB=1.425,2.850,0.000,0.825,4.080,8.160 MPI_PROCESS=7 /

Cell Qty: 19008 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s21' IJK=18,11,96 XB=2.850,4.200,0.825,1.650,0.000,4.080 MPI_PROCESS=7 /

Cell Qty: 18240 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s7' IJK=19,10,96 XB=0.000,1.425,1.650,2.400,4.080,8.160 MPI_PROCESS=7 /

Cell Qty: 17280 | Size: 0.075·0.075·0.042m | Aspect: 1.8 | Poisson: Yes

&MESH ID='Mesh 01_s25' IJK=18,10,96 XB=2.850,4.200,1.650,2.400,4.080,8.160 MPI_PROCESS=7 /
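If you want to double-check the balance of an exported file yourself, a short script can total the cells assigned to each MPI process. The following is a minimal sketch: `cells_per_process` is a hypothetical helper, and the regular expression assumes each MESH sits on a single line with IJK appearing before MPI_PROCESS, as in the listing above.

```python
import re
from collections import defaultdict

# Assumes one &MESH per line, with IJK written before MPI_PROCESS.
MESH_RE = re.compile(
    r"&MESH\b.*?IJK=(\d+),(\d+),(\d+).*?MPI_PROCESS=(\d+)", re.IGNORECASE
)


def cells_per_process(fds_text):
    """Sum I*J*K over all MESH lines, grouped by MPI_PROCESS."""
    totals = defaultdict(int)
    for i, j, k, rank in MESH_RE.findall(fds_text):
        totals[int(rank)] += int(i) * int(j) * int(k)
    return dict(totals)


# A few MESH lines copied from the listing above.
sample = """
&MESH ID='Mesh 01_s0' IJK=19,11,96 XB=0.000,1.425,0.000,0.825,0.000,4.080 MPI_PROCESS=0 /
&MESH ID='Mesh 01_s11' IJK=19,11,96 XB=1.425,2.850,0.000,0.825,8.160,12.240 MPI_PROCESS=0 /
&MESH ID='Mesh 01_s8' IJK=19,10,96 XB=0.000,1.425,1.650,2.400,8.160,12.240 MPI_PROCESS=0 /
&MESH ID='Mesh 01_s1' IJK=19,11,96 XB=0.000,1.425,0.000,0.825,4.080,8.160 MPI_PROCESS=1 /
"""
print(cells_per_process(sample))  # {0: 58368, 1: 20064}
```

To run it on a real case, read the exported file into a string and pass it to `cells_per_process`; the totals should match the "Cell Qty" values in the "MPI Process" comment lines.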