Run OpenFOAM in Docker on a SLURM cluster

This guide covers running OpenFOAM within Docker containers on a SLURM cluster, along with best practices and troubleshooting tips:

1. Prerequisites

- SLURM Cluster: A functioning SLURM cluster with access to compute nodes.

- Docker Engine: Docker Engine must be installed and configured on each compute node within your SLURM cluster.

- OpenFOAM Image: A suitable Docker image containing your desired OpenFOAM version, libraries, and dependencies. You can build a custom image or use a pre-built one from Docker Hub (e.g., openfoam/openfoam-latest).

- Your OpenFOAM Case: The directory containing your OpenFOAM simulation case files (e.g., system, constant, 0, etc.).

- SLURM Script: You’ll need a SLURM script to submit your job to the cluster.

2. Creating a Docker Image (if needed)

- Dockerfile: Create a Dockerfile to define your OpenFOAM image. Example:

FROM openfoam/openfoam-latest

WORKDIR /home/openfoam

# Install additional packages (if needed)

RUN apt-get update && apt-get install -y python3 python3-pip && rm -rf /var/lib/apt/lists/*

# Copy your case files

COPY . /home/openfoam/my_case

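# Run from the case directory so the solver finds system/, constant/ and 0/

WORKDIR /home/openfoam/my_case

# Define the entrypoint (your OpenFOAM command; adjust the path to match your image)

ENTRYPOINT ["/opt/openfoam/bin/foamRun", "simpleFoam"]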

- Build the image:

docker build -t my-openfoam-image .

3. Writing the SLURM Script

#!/bin/bash

#SBATCH --job-name=my_openfoam_job

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=1

#SBATCH --cpus-per-task=4

#SBATCH --mem=8G

#SBATCH --time=0-10:00

#SBATCH --output=my_openfoam_job-%j.out

#SBATCH --error=my_openfoam_job-%j.err

# Load Docker environment

module load docker

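# Run the Docker container (no -it: a batch job has no terminal to attach to)

docker run --rm -v "$(pwd)/my_case:/home/openfoam/my_case" my-openfoam-image

Explanation of the Script:

- Header: This section specifies job details (name, resources, time, etc.).

- Load Docker: Loads the Docker module on your cluster, if your site provides one.

- docker run: Starts a Docker container.

- --rm: Removes the container once it exits.

- -v: Mounts your case directory inside the container. This makes your case files available within the container.

- my-openfoam-image: The name of your Docker image.

The -it flags (interactive mode with an attached terminal) are deliberately left out. SLURM runs batch scripts without a terminal, and docker run -it then fails with "the input device is not a TTY".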
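As a minimal sketch of how a batch-safe docker run line could be assembled, the function below prints the command rather than executing it. The function name build_docker_run is hypothetical; the image name and the /home/openfoam/my_case mount point are taken from this guide. It assumes SLURM sets SLURM_CPUS_PER_TASK (which it does when --cpus-per-task is given) and falls back to 1 otherwise.

```shell
#!/bin/bash
# Sketch only: prints a docker run command suited to a SLURM batch job.
# build_docker_run is a hypothetical helper name, not part of Docker or SLURM.

build_docker_run() {
    local case_dir="$1"   # host path of the OpenFOAM case directory
    local image="$2"      # Docker image to run
    local cpus="${SLURM_CPUS_PER_TASK:-1}"   # cores allocated by SLURM, default 1

    # --rm     remove the container when it exits
    # --cpus   keep the container within the cores SLURM allocated
    # --user   run as the submitting user so results are not owned by root
    # -w       start in the case directory
    # no -it:  batch jobs have no terminal to attach to
    printf 'docker run --rm --cpus=%s --user %s:%s -v "%s:/home/openfoam/my_case" -w /home/openfoam/my_case %s\n' \
        "$cpus" "$(id -u)" "$(id -g)" "$case_dir" "$image"
}

build_docker_run "$(pwd)/my_case" my-openfoam-image
```

Printing the command first makes it easy to inspect in the job log before running it for real; whether --user works depends on how the image sets up its home directory and OpenFOAM environment.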

4. Submitting the Job

sbatch my_openfoam_script.sh
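Once the job is submitted, its ID is needed to follow it with squeue or sacct. The sketch below extracts that ID from the text sbatch prints; job_id_from_sbatch is a hypothetical helper name, and the sacct call in the comments assumes job accounting is enabled on your cluster.

```shell
#!/bin/bash
# Sketch only: pull the job ID out of sbatch output.
# job_id_from_sbatch is a hypothetical helper name.

job_id_from_sbatch() {
    # Accepts "Submitted batch job 12345" (default sbatch output)
    # or "12345" / "12345;cluster" (output of sbatch --parsable).
    local out="$1"
    out="${out##* }"     # keep the last space-separated word
    echo "${out%%;*}"    # drop an optional ";cluster" suffix
}

job_id_from_sbatch "Submitted batch job 12345"   # prints 12345

# Typical use on the cluster:
#   jobid=$(job_id_from_sbatch "$(sbatch my_openfoam_script.sh)")
#   squeue -j "$jobid"                                       # queue state
#   sacct -j "$jobid" --format=JobID,State,Elapsed,MaxRSS    # after completion
```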

Important Considerations:

- Mount Points: Ensure you are correctly mounting your case directory inside the container to give OpenFOAM access to the files it needs.

- Resources: Carefully allocate resources like CPU cores, memory, and time. Overestimation can waste resources, while underestimation can lead to job failure.

- Image Size: Keep your Docker image as small as possible to reduce transfer times and storage overhead.

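- Parallelism: A solver started serially uses a single core regardless of --cpus-per-task. To use the allocated cores, decompose the case with decomposePar and start the solver through mpirun with the -parallel option inside the container.

- Network Access: Docker containers get outbound network access by default through the bridge network. Inbound connections (e.g., for visualization tools) require published ports (-p) or host networking (--network host), subject to your cluster's policy.

- Cleaning Up: Run the container with --rm so it is removed when it exits, and automate the removal or archiving of generated data you no longer need after your job completes.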
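To illustrate the parallelism point, here is a minimal sketch of the command sequence for a single-node parallel run. The helper name parallel_case_cmd is hypothetical. It assumes the image ships an MPI launcher (mpirun) alongside OpenFOAM, and that numberOfSubdomains in system/decomposeParDict matches the number of processes.

```shell
#!/bin/bash
# Sketch only: compose the in-container commands for a parallel OpenFOAM run.
# parallel_case_cmd is a hypothetical helper name.

parallel_case_cmd() {
    local solver="$1"                                # e.g. simpleFoam
    local nprocs="${2:-${SLURM_CPUS_PER_TASK:-1}}"   # default: cores allocated by SLURM

    # decomposePar   splits the mesh and fields into processor* directories
    # mpirun         starts one solver process per subdomain
    # reconstructPar merges the per-processor results back into the case
    printf 'decomposePar -force && mpirun -np %s %s -parallel && reconstructPar\n' \
        "$nprocs" "$solver"
}

parallel_case_cmd simpleFoam 4
# prints: decomposePar -force && mpirun -np 4 simpleFoam -parallel && reconstructPar
```

How this string reaches the container depends on the image. One option is to override the entrypoint with a shell (docker run --entrypoint bash ... -c "...") and run the sequence from the case directory, provided the OpenFOAM environment is loaded in that shell.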

Example with Pre-built Image:

You can use a pre-built OpenFOAM image without creating your own:

#!/bin/bash

#SBATCH ... (as above)

module load docker

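# Use the openfoam/openfoam-latest image (no -it: a batch job has no terminal).
# Append the solver command to run if the image does not define one.

docker run --rm -v "$(pwd)/my_case:/home/openfoam/my_case" openfoam/openfoam-latest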

Troubleshooting:

- Check Logs: Inspect the output files (my_openfoam_job-%j.out and my_openfoam_job-%j.err) to troubleshoot problems.

- SLURM Errors: Look for error messages from SLURM itself.

- Docker Errors: Check the Docker logs for problems starting the container or executing commands.
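The checks above can be partly automated. The sketch below scans one or more log files for a few well-known failure messages from OpenFOAM, Docker, and SLURM; scan_job_logs is a hypothetical helper name and the pattern list is a starting point, not an exhaustive catalogue.

```shell
#!/bin/bash
# Sketch only: flag common failure messages in SLURM job logs.
# scan_job_logs is a hypothetical helper name.

scan_job_logs() {
    local f found=0
    for f in "$@"; do
        [ -f "$f" ] || { echo "$f: log file not found"; found=1; continue; }
        grep -q 'FOAM FATAL' "$f" &&
            { echo "$f: OpenFOAM fatal error"; found=1; }
        grep -q 'not a TTY' "$f" &&
            { echo "$f: docker run used -t without a terminal"; found=1; }
        grep -qi 'permission denied' "$f" &&
            { echo "$f: permission problem (Docker socket or mounted files)"; found=1; }
        grep -q 'DUE TO TIME LIMIT' "$f" &&
            { echo "$f: job hit the SLURM time limit"; found=1; }
        grep -qiE 'oom[-_]kill|out of memory' "$f" &&
            { echo "$f: job ran out of memory"; found=1; }
    done
    return "$found"   # 0 if nothing suspicious was found, 1 otherwise
}
```

For a job with ID 12345, this could be called as scan_job_logs my_openfoam_job-12345.out my_openfoam_job-12345.err.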

Advanced Techniques:

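- Singularity: Many HPC sites do not allow Docker on compute nodes because the Docker daemon runs as root. Singularity (and its successor Apptainer) runs containers as the submitting user without a daemon and can run images pulled from Docker Hub, which makes it a good alternative.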

- MPI: Use MPI within your OpenFOAM simulations for parallel computing.

- GPU Acceleration: Utilize GPUs for faster simulations by using specialized OpenFOAM images with GPU support.
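For the Singularity route, a rough equivalent of the Docker workflow is sketched below. The function prints the commands rather than running them. The helper name singularity_cmds and the file name my-openfoam.sif are hypothetical; the image name and mount point are the ones used in this guide. It assumes the solver is on the PATH inside the container, which depends on how the image sets up the OpenFOAM environment.

```shell
#!/bin/bash
# Sketch only: print Singularity commands mirroring the docker run usage.
# singularity_cmds and my-openfoam.sif are hypothetical names.

singularity_cmds() {
    local image="$1"                 # Docker Hub image, e.g. openfoam/openfoam-latest
    local case_dir="$2"              # host path of the OpenFOAM case directory
    local sif="${3:-my-openfoam.sif}"

    # Convert the Docker image into a single .sif file (done once)
    printf 'singularity pull %s docker://%s\n' "$sif" "$image"

    # Run the solver with the case bind-mounted, like docker run -v
    printf 'singularity exec --bind "%s:/home/openfoam/my_case" %s simpleFoam -case /home/openfoam/my_case\n' \
        "$case_dir" "$sif"
}

singularity_cmds openfoam/openfoam-latest "$(pwd)/my_case"
```

In a SLURM script, the singularity exec line would take the place of docker run; no daemon or module for Docker is needed on the compute node.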

Let me know if you have any further questions or specific requirements for your OpenFOAM setup!

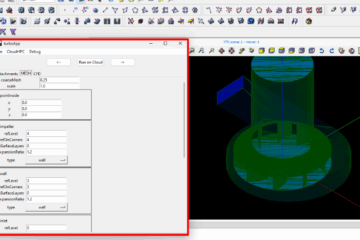

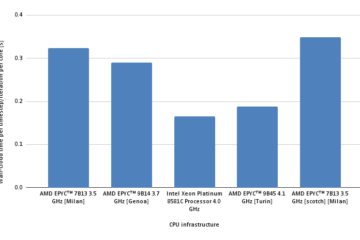

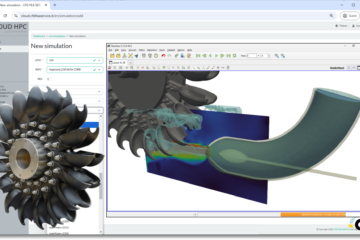
