How to reach good scalability in FDS

To speed up complex fire simulations in FDS, it is important to achieve good scalability and to balance the workload across the available hardware. Fire simulations commonly lead to long calculation times because of the large number of physical and chemical processes involved.

In a complex fire simulation, the hardware must carry out a very large number of calculations. If the available hardware is not sufficient for the size of the analysis, the calculation time will be long.

To reduce the simulation time, it is necessary to increase the number of hardware resources available.

This concept can be illustrated with a simple example. In the ideal case, an FDS simulation run on 4 CPUs takes ¼ of the time required to run the same simulation on only 1 CPU.

Hence, it is possible to speed up our simulations by increasing the amount of hardware (CPUs) involved in the problem.

Thanks to CLOUD HPC you can use the power of a cluster to run your engineering analyses. Select the amount of CPU and RAM you need directly from the web app to run and speed up your simulations. If interested, you can register here and get 300 free vCPU/hrs.

Here we give a simplified overview of how to reach good scalability in FDS. We start from how scalability efficiency behaves, go through the FDS parallel processing approaches, and then look at the MPI strategy in more detail. Finally, we evaluate how to balance your simulation workload across the available hardware.

Scalability Efficiency

Ideally, the speedup is directly proportional to the number of CPUs involved, so performance improves linearly as CPUs are added. In practice, the speedup increases only up to a certain point. Beyond that point, adding further hardware resources stops improving the speedup or even reduces it. This typically happens when more hardware than necessary is assigned to a single mesh, which reduces performance.

The speedup can be quantified as the ratio of the simulation time T1 obtained with 1 CPU to the simulation time TN obtained with N CPUs [1]:

S = T1 / TN

The following formula evaluates how efficient the scaling is, where S is the speedup and N is the number of CPUs:

E = S / N = T1 / (N x TN)
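As a worked example of the two formulas, here is a minimal Python sketch. The timings are hypothetical values chosen only for illustration; they are not measurements from FDS or from the case study cited in this post.

```python
def speedup(t1: float, tn: float) -> float:
    """Speedup S = T1 / TN."""
    return t1 / tn


def efficiency(t1: float, tn: float, n: int) -> float:
    """Efficiency E = S / N = T1 / (N x TN)."""
    return speedup(t1, tn) / n


# Hypothetical wall-clock times in hours, keyed by the number of CPUs used.
timings = {1: 40.0, 4: 12.5, 8: 10.0, 16: 10.0}

t1 = timings[1]
for n, tn in timings.items():
    s = speedup(t1, tn)
    e = efficiency(t1, tn, n)
    print(f"N={n:2d}  S={s:.2f}  E={e:.2f}")
```

With these made-up numbers the speedup stops growing between 8 and 16 CPUs while the efficiency keeps falling, which is the behaviour described above.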

The limit at which the speedup stops growing depends on the type of model, its set-up and the size of the meshes. Larger and more complex problems allow you to use more hardware resources without reducing scalability performance.

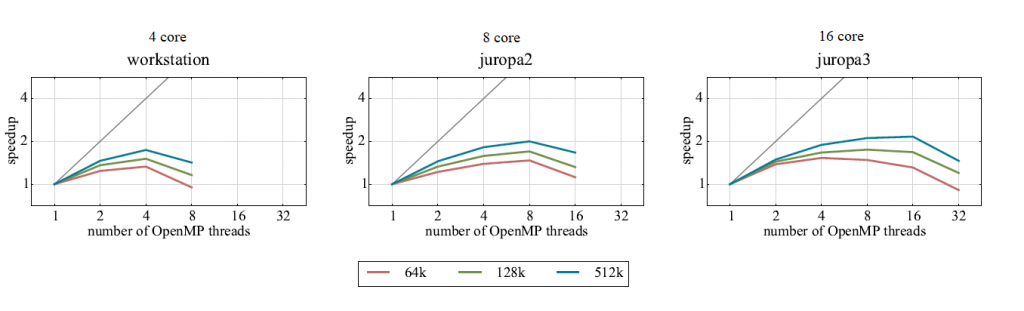

The graph shows a case study [2] in which three meshes, with 64k, 128k and 512k cells respectively, are run on three computer configurations: 4 cores, 8 cores and 16 cores. It is clear from the graph that in all three cases the speedup rises up to a factor of two and then decreases.

Parallel Processing

FDS allows you to speed up the calculation with parallel processing strategies, which reduce the calculation time by running parts of the problem simultaneously.

In FDS there are two main strategies for parallel processing [1]:

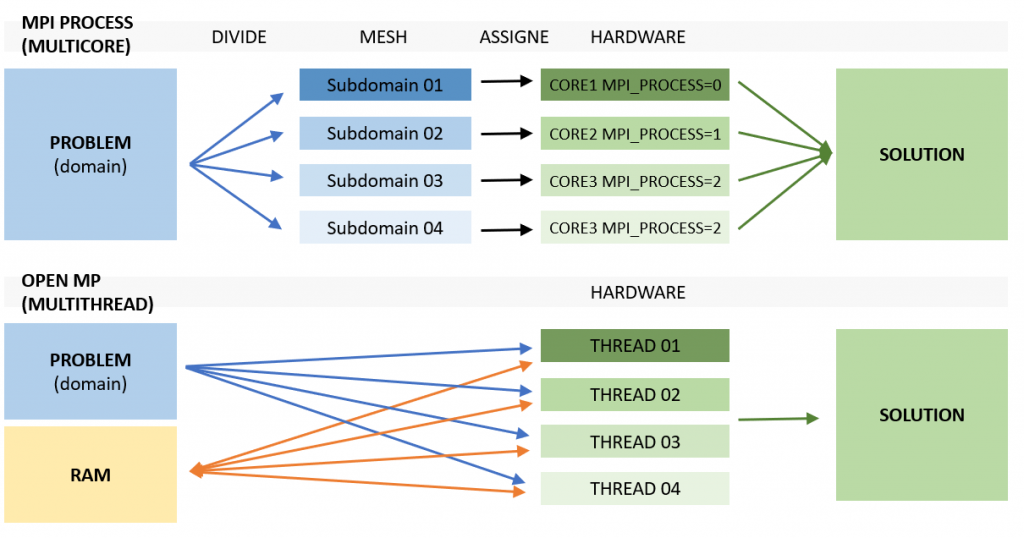

- MPI PROCESS (MULTICORE). MPI allows you to assign part of a problem, such as a mesh, to a single processor. Every core solves its own subdomain in parallel, and the results are finally combined.

- OPEN MP (MULTITHREADS). Open Multi-Processing, or OpenMP, does not require the computational domain to be split into multiple meshes. Instead, the simulation is assigned to multiple threads that work at the same time in a coordinated way. Hence, a single computer is able to run one or more FDS simulations on multiple cores.

MPI Process

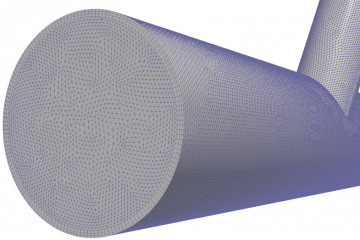

To better understand the MPI approach, imagine dividing a computational domain into multiple meshes and assigning each mesh to its own processor.

Every core completes its part of the simulation, and the results are combined into a single solution by means of the Message Passing Interface (MPI) communication library [3]. The processes used in the simulation can run on a single computer or across a network.

MPI transfers information between the subdomains. A single MPI process can be assigned to a single mesh or to multiple meshes.

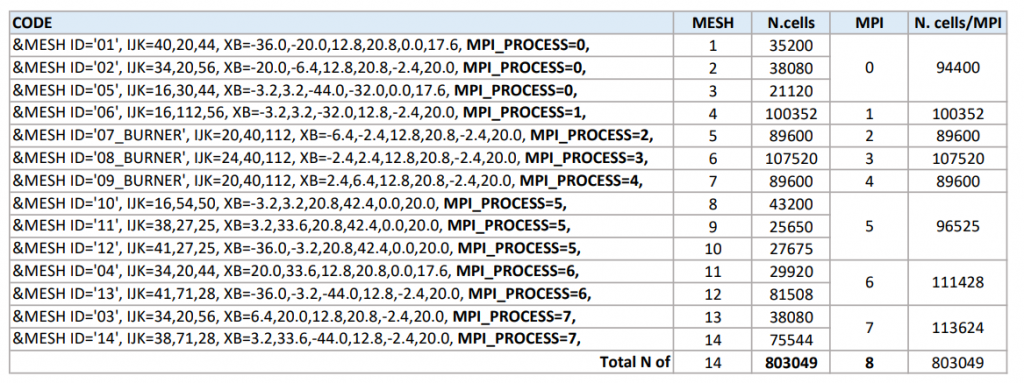

Therefore, once the domain is divided into meshes, it is important to assign every mesh to an MPI process. In FDS, the MPI_PROCESS parameter on the MESH line assigns a mesh to a given process. FDS numbers the meshes starting from 1, while MPI numbers its processes starting from 0. With this method it is possible to assign one mesh to a single core, or several meshes to one core.

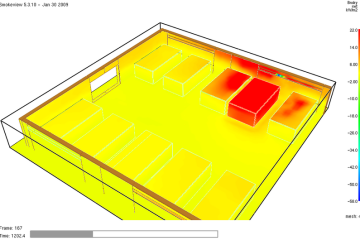
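To make the numbering concrete, the following Python sketch prints MESH lines with an explicit MPI_PROCESS for each mesh. The domain size, the cell counts and the helper name `mesh_line` are assumptions made up for this illustration; only the `IJK`, `XB` and `MPI_PROCESS` parameters come from FDS itself.

```python
def mesh_line(ijk, xb, mpi_process):
    """Format one FDS &MESH line with an explicit MPI_PROCESS (hypothetical helper)."""
    ijk_txt = ",".join(str(v) for v in ijk)
    xb_txt = ",".join(f"{v:.1f}" for v in xb)
    return f"&MESH IJK={ijk_txt}, XB={xb_txt}, MPI_PROCESS={mpi_process} /"


# Hypothetical domain: four 5 m x 5 m x 2.5 m meshes side by side along x,
# each with 50 x 50 x 25 cells.
meshes = [
    ((50, 50, 25), (x0, x0 + 5.0, 0.0, 5.0, 0.0, 2.5))
    for x0 in (0.0, 5.0, 10.0, 15.0)
]

# Meshes 1 and 2 get their own process (0 and 1); meshes 3 and 4 share process 2.
processes = [0, 1, 2, 2]

lines = [mesh_line(ijk, xb, p) for (ijk, xb), p in zip(meshes, processes)]
print("\n".join(lines))
```

Note how the first mesh (mesh 1 for FDS) is assigned to MPI process 0, and how the last two meshes share one process, so this layout needs three MPI processes in total.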

Equilibrate the workload

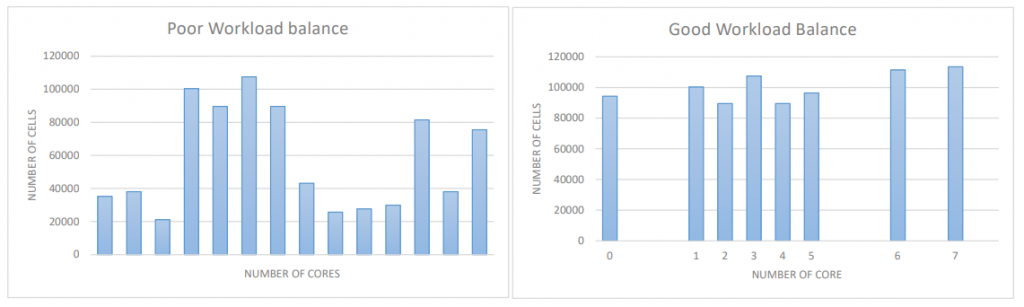

Each mesh can require a different amount of work, depending on the accuracy required, the number of cells in the mesh and their size. For example, the mesh in which the fire starts is usually made of a higher number of smaller cells and needs more precise calculations than a mesh far away from the fire, where larger cells and less detailed calculations are sufficient. As a rule of thumb, assign at least 10,000 to 20,000 cells to each core.
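The rule of thumb can be turned into a quick estimate of how many cores are worth requesting. This is only a sketch: the function name and the 500,000-cell model are hypothetical, and the result is an upper bound suggested by the rule, not a guarantee of good scaling.

```python
def max_useful_cores(total_cells: int, min_cells_per_core: int) -> int:
    """Largest core count that still leaves each core the minimum number of cells."""
    return max(1, total_cells // min_cells_per_core)


# Hypothetical model with 500,000 cells in total.
total_cells = 500_000

for min_cells in (10_000, 20_000):
    cores = max_useful_cores(total_cells, min_cells)
    print(f"at least {min_cells} cells per core: up to {cores} cores")
```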

To increase performance and balance the workload between processors, it is best to give each CPU meshes with a similar number of cells, so that they work in parallel for the same duration. This matters because a processor working on a mesh with more cells takes longer than a processor working on a mesh with fewer cells. The CPU with the lower workload finishes its work earlier and then waits, wasting its capacity. Of course, the fact that some meshes are computing the fire and others are not can also affect scalability, but balancing the workload between processors is still a good starting point.

The scalability achieved with the OpenMP strategy is considerably lower than that obtained with MPI. However, the MPI solution requires you to break up the domain and assign the meshes to the processes manually. FDS is able to use both approaches simultaneously.
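One simple way to get similar cell counts per process is a greedy assignment: take the meshes from largest to smallest and give each one to the process that currently has the fewest cells. The sketch below is a generic heuristic written for this post, not something FDS does for you, and the cell counts are hypothetical. It also uses the cell count as the only measure of workload, which is a simplification.

```python
def balance(cells_per_mesh, n_processes):
    """Greedy assignment of meshes to MPI processes by cell count.

    Returns (assignment, loads): assignment maps the FDS mesh number
    (starting from 1) to the MPI process number (starting from 0), and
    loads lists the total cells handled by each process.
    """
    loads = [0] * n_processes
    assignment = {}
    # Largest meshes first, each to the currently least-loaded process.
    order = sorted(range(len(cells_per_mesh)),
                   key=lambda i: cells_per_mesh[i], reverse=True)
    for i in order:
        p = loads.index(min(loads))
        assignment[i + 1] = p
        loads[p] += cells_per_mesh[i]
    return assignment, loads


# Hypothetical cell counts for meshes 1 to 5.
cells = [100_000, 60_000, 40_000, 50_000, 50_000]
assignment, loads = balance(cells, n_processes=3)

for mesh in sorted(assignment):
    print(f"mesh {mesh} -> MPI_PROCESS={assignment[mesh]}")

# Ratio of the busiest process to the average; 1.0 means perfectly even.
imbalance = max(loads) / (sum(loads) / len(loads))
print(f"cells per process: {loads}, imbalance: {imbalance:.2f}")
```

A greedy pass like this does not always find the most even split, so it is worth checking the resulting cell counts per process before running the simulation.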
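When both approaches are combined, the total number of cores in use is the number of MPI processes multiplied by the number of OpenMP threads per process. The sketch below only builds the launch command and environment, it does not run anything. The executable name `fds`, the input file name and the helper `hybrid_launch` are assumptions for illustration; `mpiexec -n` and the `OMP_NUM_THREADS` variable are the standard MPI and OpenMP mechanisms, and the exact launcher on your system may differ.

```python
def hybrid_launch(n_mpi, n_threads, input_file, executable="fds"):
    """Build the environment and command for a hybrid MPI + OpenMP run.

    Hypothetical helper: returns (env, command, total_cores) without
    executing anything.
    """
    env = {"OMP_NUM_THREADS": str(n_threads)}  # OpenMP threads per MPI process
    command = ["mpiexec", "-n", str(n_mpi), executable, input_file]
    total_cores = n_mpi * n_threads
    return env, command, total_cores


# Hypothetical job: 4 MPI processes (for example one per mesh), 2 threads each.
env, command, total_cores = hybrid_launch(4, 2, "job_name.fds")
print(env)
print(" ".join(command))
print(f"cores used: {total_cores}")
```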

Finally, FDS collects the diagnostics for a given calculation into a file called CHID.out.

If you are interested in knowing more about the scalability of an FDS simulation and its efficiency, we suggest checking out this webinar.

Bibliography

[1] K. McGrattan et al., “Fire Dynamics Simulator User’s Guide, Sixth Edition”, 2019

[2] D. Haarhoff, “Performance Analysis and Shared Memory Parallelisation of FDS”, 2014

[3] S. Kilian, “Numerical Insights Into the Solution of the FDS Pressure Equation: Scalability and Accuracy”, 2014

